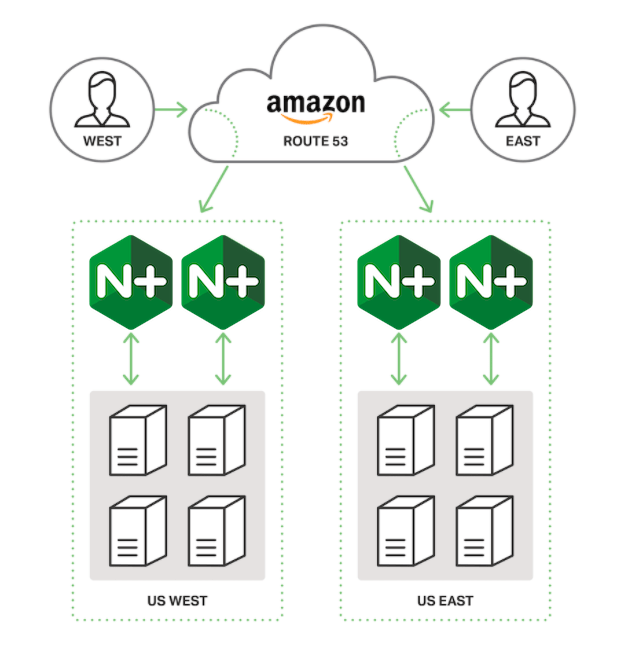

Global Server Load Balancing with Amazon Route 53 and NGINX Plus

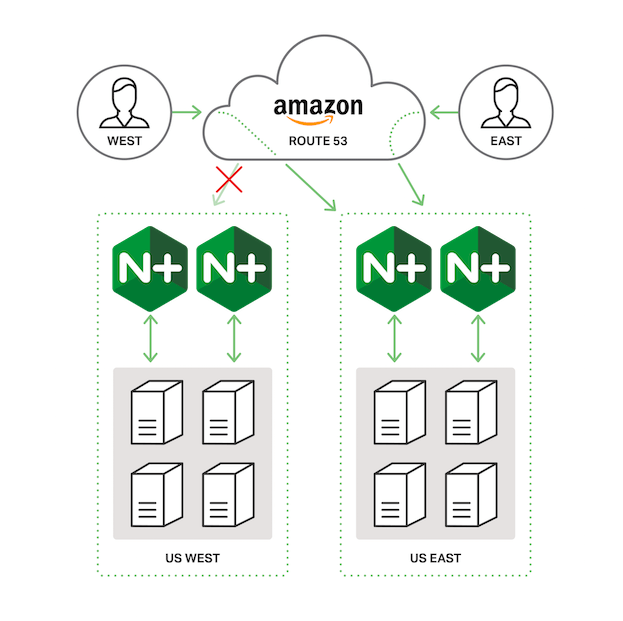

This deployment guide explains how to configure global server load balancing (GSLB) of traffic for web domains hosted in Amazon Elastic Compute Cloud (EC2). For high availability and improved performance, you set up multiple backend servers (web servers, application servers, or both) for a domain in two or more AWS regions. Within each region, NGINX Plus load balances traffic across the backend servers.

The AWS Domain Name System (DNS) service, Amazon Route 53, performs global server load balancing by responding to a DNS query from a client with the DNS record for the region that is closest to the client and hosts the domain. For best performance and predictable failover between regions, “closeness” is measured in terms of network latency rather than the actual geographic location of the client.

The Appendix provides instructions for creating EC2 instances with the names used in this guide, and installing and configuring the F5 NGINX software on them.

About NGINX Plus

NGINX Plus is the commercially supported version of the NGINX Open Source software. NGINX Plus is a complete application delivery platform, extending the power of NGINX with a host of enterprise‑ready capabilities that enhance an AWS application server deployment and are instrumental to building web applications at scale:

- Full‑featured HTTP, TCP, and UDP load balancing

- Intelligent session persistence

- High‑performance reverse proxy

- Caching and offload of dynamic and static content

- Adaptive streaming to deliver audio and video to any device

- Application-aware health checks and high availability

- Advanced activity monitoring available via a dashboard or API

- Management and real‑time configuration changes with DevOps‑friendly tools

Topology for Global Load Balancing with Amazon Route 53 and NGINX Plus

The setup for global server load balancing (GSLB) in this guide combines Amazon Elastic Compute Cloud (EC2) instances, Amazon Route 53, NGINX Plus instances, and NGINX Open Source instances.

Note: Global server load balancing is also sometimes called global load balancing (GLB). The terms are used interchangeably in this guide.

Route 53 is a Domain Name System (DNS) service that performs global server load balancing by routing each request to the AWS region closest to the requester’s location. This guide uses two regions: US West (Oregon) and US East (N. Virginia).

In each region, two or more NGINX Plus load balancers are deployed in a high‑availability (HA) configuration. In this guide, there are two NGINX Plus load balancer instances per region. You can also use NGINX Open Source for this purpose, but it lacks the application health checks that make for more precise error detection. For simplicity, we’ll refer to NGINX Plus load balancers throughout this guide, noting when features specific to NGINX Plus are used.

The NGINX Plus instances load balance traffic across web or app servers in their region. The diagram shows four backend servers, but you can deploy as many as needed. In this guide, there are two NGINX Open Source web servers in each region (four total); each one serves a customized static page identifying the server so you can track how load balancing is working.

Health checks are an integral feature of the configuration. Route 53 monitors the health of each NGINX Plus load balancer, marking it as down if a connection attempt times out or the HTTP response code is not 200 OK. Similarly, the NGINX Plus load balancer monitors the health of the upstream web servers and propagates those errors to Route 53. If both web servers or both NGINX Plus instances in a region are down, Route 53 fails over to the other region.
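The failover behavior described above can be illustrated with a toy model. This is only a sketch of the decision logic as this guide describes it, not Route 53's actual implementation; the function names and the latency figures are hypothetical.

```python
# Toy model of latency-based routing with health-check failover.
# NOT Route 53's implementation: names and latency values are illustrative.

def region_healthy(lb_up, backends_up):
    """A region can serve traffic if at least one LB and one backend are up."""
    return any(lb_up) and any(backends_up)


def choose_region(latency_ms, healthy):
    """Return the healthy region with the lowest latency, or None if none is healthy."""
    candidates = {r: ms for r, ms in latency_ms.items() if healthy.get(r, False)}
    if not candidates:
        return None
    return min(candidates, key=candidates.get)


# Hypothetical latencies measured from one client's network.
latency = {"us-west-2": 20, "us-east-1": 85}

# Normal operation: the lower-latency region is chosen.
print(choose_region(latency, {"us-west-2": True, "us-east-1": True}))

# Both US West load balancers are down, so traffic fails over to US East.
west_ok = region_healthy(lb_up=[False, False], backends_up=[True, True])
print(choose_region(latency, {"us-west-2": west_ok, "us-east-1": True}))
```

The same result follows when both backend servers in a region are down, because the load balancers then return errors and fail their own health checks.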

Prerequisites

The instructions assume you have the following:

- An AWS account.

- An NGINX Plus subscription, either purchased or a free 30-day trial.

- Familiarity with NGINX and NGINX Plus configuration syntax. Complete configuration snippets are provided, but not analyzed in detail.

- Eight EC2 instances, four in each of two regions. The Appendix provides instructions for creating instances with the expected names, and installing and configuring NGINX Plus and NGINX Open Source as appropriate.

Configuring Global Server Load Balancing

With the required AWS configuration in place, we’re ready to configure Route 53 for global server load balancing.

Complete step‑by‑step instructions are provided in the following sections:

- Creating a Hosted Zone

- Linking the Domain to EC2 Instances

- Configuring Health Checks for Route 53 Failover

- Configuring NGINX Plus Application Health Checks

Creating a Hosted Zone

Create a hosted zone, which basically involves designating a domain name to be managed by Route 53. As covered in the instructions, you can either use (transfer) an existing domain name or purchase a new one from Amazon.

Note: When you transfer an existing domain, it can take up to 48 hours for the updated DNS record to propagate. The propagation time is usually much shorter for a new domain.

-

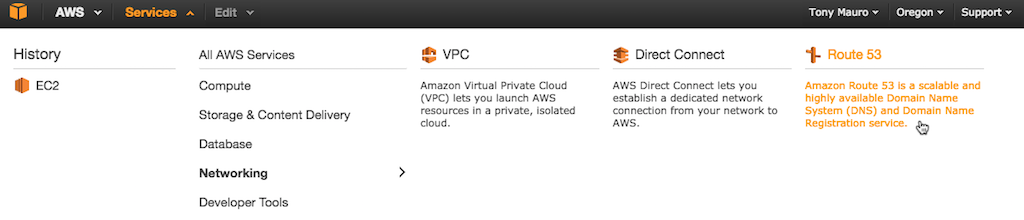

Log in to the AWS Management Console (console.aws.amazon.com/).

-

Access the Route 53 dashboard page by clicking Services in the top AWS navigation bar, mousing over Networking in the All AWS Services column and then clicking Route 53.

-

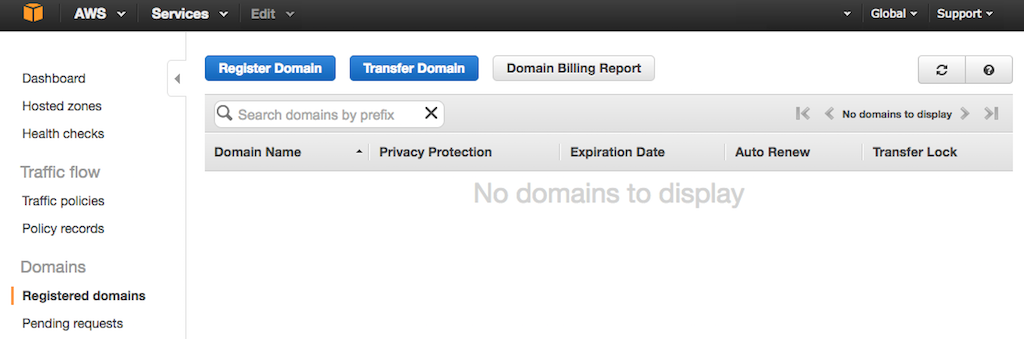

Depending on your history working with Route 53 instances, you might see the Route 53 Dashboard. Navigate to the Registered domains tab, shown here:

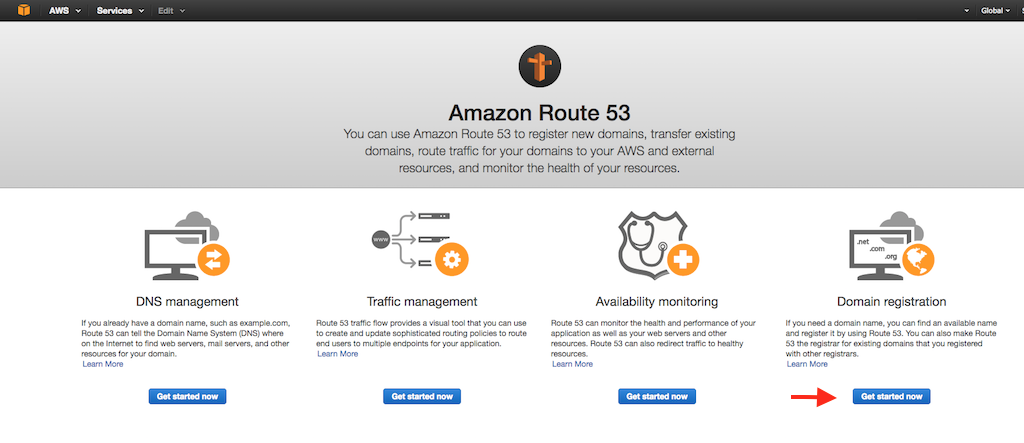

If you see the Route 53 home page instead, access the Registered domains tab by clicking the Get started now button under Domain registration.

-

On the Registered domains tab (the first figure in the Step 3), click either the Register Domain or Transfer Domain button as appropriate and follow the instructions.

-

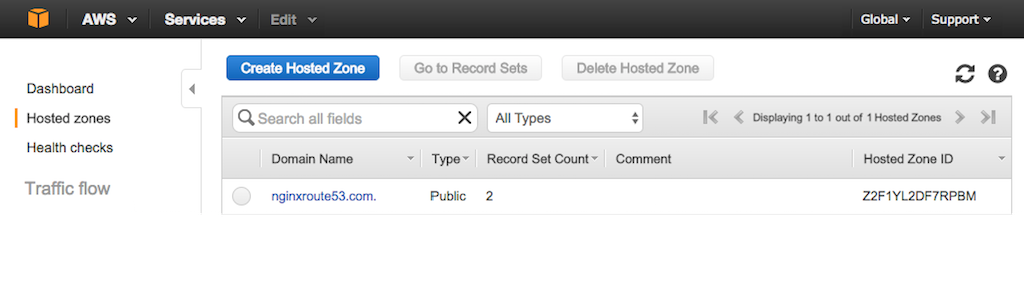

When you register or transfer a domain, AWS by default creates a hosted zone for it. To verify that there is a hosted zone for your domain, navigate to the Hosted Zones tab on the Route 53 dashboard. This example shows the domain we registered for this guide, nginxroute53.com.

(If no domain is listed, click the Create Hosted Zone button. The Create Hosted Zone column opens on the right side of the tab. Type the domain name in the Domain Name field and click the Create button at the bottom of the column.)

Linking the Domain to EC2 Instances

Now we link the domain to our EC2 instances so that content for the domain can be served from them. We do this by creating Route 53 record sets for the domain. To implement GSLB, in the record sets we specify Latency as the routing policy. This means that in response to a client query for DNS information about a domain, Route 53 sends the DNS records for the region hosting that domain in which servers are responding most quickly to addresses in the client’s IP range.

You can also select Geolocation as the routing policy, but failover might not work as expected. With the Geolocation routing policy, only clients from a specific continent or country can access the content on a server. If you specify the United States, you also have the option of specifying a state as the “sublocation”, limiting access to users from only that state. In this case, you can also create a generic US location to catch all requests that aren’t sent from a listed sublocation.

We recommend that you choose Geolocation as the routing policy only for particular use cases, for example when you want to customize content for users in different countries – written in the country’s official language with prices in its currency, say. If your goal is to deliver content as quickly as possible, Latency remains the best routing policy.

Create records sets for your domain:

-

If you’re not already on the Hosted Zones tab of the Route 53 dashboard, navigate to it.

-

Click on the domain name in the Domain Name row for your hosted zone.

The tab changes to display the record sets for the domain.

-

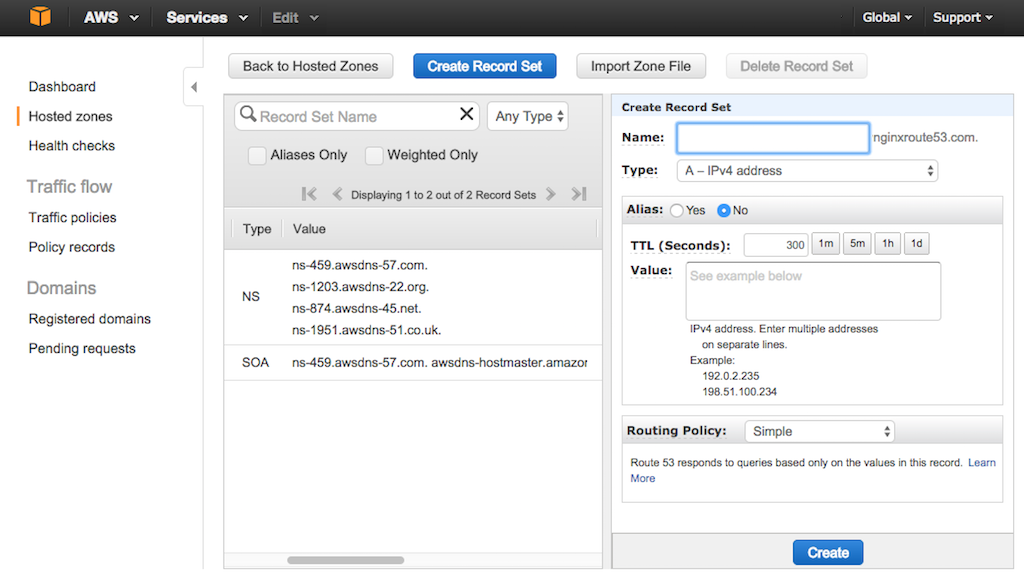

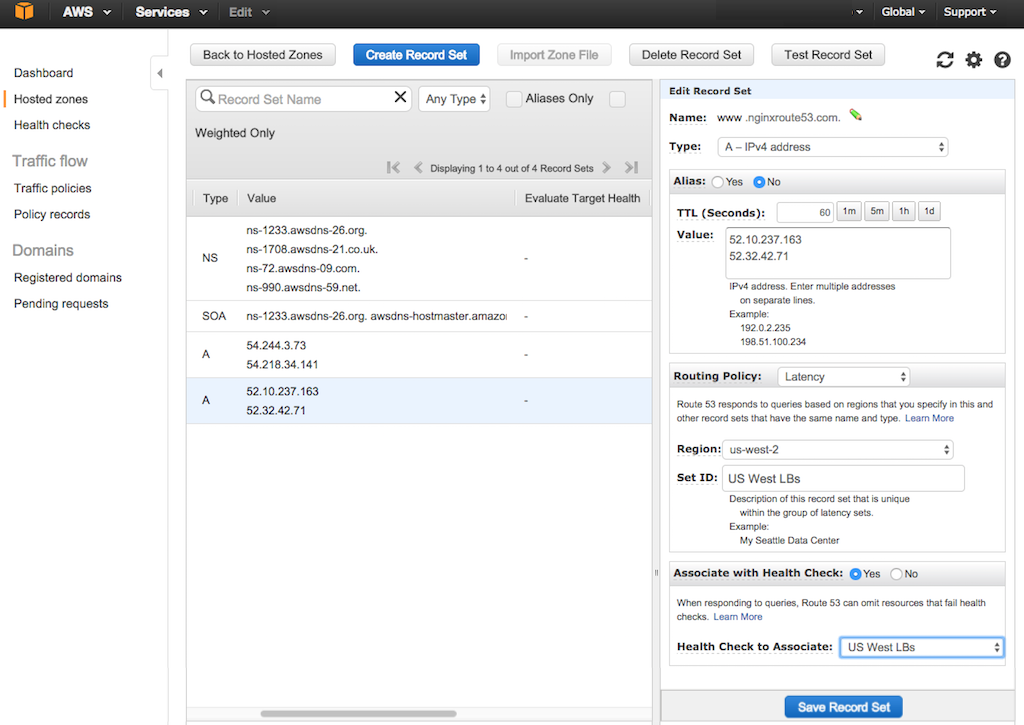

Click the Create Record Set button. The Create Record Set column opens on the right side of the tab, as shown here:

-

Fill in the fields in the Create Record Set column:

-

Name – You can leave this field blank, but for this guide we are setting the name to www.nginxroute53.com.

-

Type – A – IPv4 address.

-

Alias – No.

-

TTL (Seconds) – 60.

Note: Reducing TTL from the default of 300 in this way can decrease the time that it takes for Route 53 to fail over when both NGINX Plus load balancers in the region are down, but there is always a delay of about two minutes regardless of the TTL setting. This is a built‑in limitation of Route 53.

-

Value – Elastic IP addresses of the NGINX Plus load balancers in the first region [in this guide, US West (Oregon)].

-

Routing Policy – Latency.

-

-

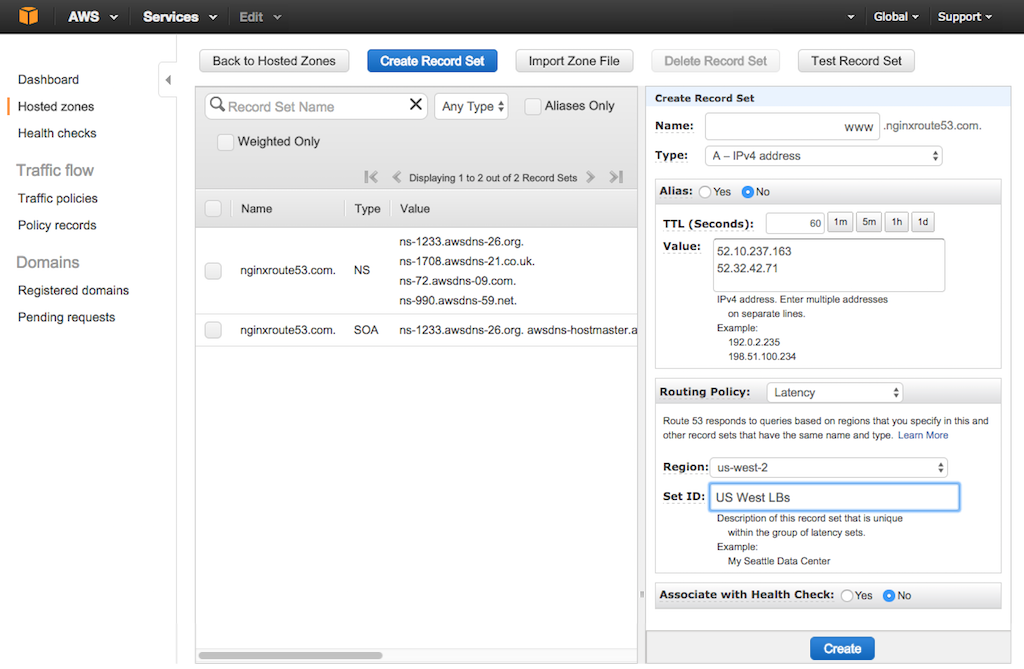

A new area opens when you select Latency. Fill in the fields as indicated (see the figure below):

- Region – Region to which the load balancers belong (in this guide, us-west-2).

- Set ID – Identifier for this group of load balancers (in this guide, US West LBs).

- Associate with Health Check – No.

When you complete all fields, the tab looks like this:

-

Click the Create button.

-

Repeat Steps 3 through 6 for the load balancers in the other region [in this guide, US East (N. Virginia)].

You can now test your website. Insert your domain name into a browser and see that your request is being load balanced between servers based on your location.
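For readers who script their DNS changes, the two latency record sets created above can also be expressed as data. The sketch below builds a change batch in the shape used by the Route 53 `ChangeResourceRecordSets` API as we understand it; verify the field names against the API reference before use. The helper name `latency_record`, the `US East LBs` set ID, and the IP addresses (taken from the documentation-only ranges) are assumptions, not values from this guide.

```python
import json


def latency_record(name, region, set_id, ip_addresses, ttl=60):
    """Build one CREATE change for a latency-routed A record (hypothetical helper)."""
    return {
        "Action": "CREATE",
        "ResourceRecordSet": {
            "Name": name,
            "Type": "A",
            "SetIdentifier": set_id,   # "Set ID" in the console
            "Region": region,          # selects the Latency routing policy
            "TTL": ttl,
            "ResourceRecords": [{"Value": ip} for ip in ip_addresses],
        },
    }


# Placeholder addresses: substitute the elastic IP addresses of your load balancers.
change_batch = {
    "Comment": "Latency-based records for GSLB",
    "Changes": [
        latency_record("www.nginxroute53.com", "us-west-2", "US West LBs",
                       ["192.0.2.10", "192.0.2.11"]),
        latency_record("www.nginxroute53.com", "us-east-1", "US East LBs",
                       ["198.51.100.10", "198.51.100.11"]),
    ],
}

print(json.dumps(change_batch, indent=2))
```

The printed JSON is intended as input for an AWS SDK or CLI call that submits record set changes for your hosted zone.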

Configuring Health Checks for Route 53 Failover

To trigger failover between AWS regions, we next configure health checks in Route 53. Route 53 monitors the NGINX Plus load balancers and fails over to the next closest region if both NGINX Plus load balancers are timing out or returning HTTP status codes other than 200 OK. (In this guide, failover is to the other region, since there are only two.)

Note: It can take up to three minutes for Route 53 to begin routing traffic to another region. Although the TTL you specify in the record set for a region can slightly affect the amount of time, failover is never instantaneous because of the processing Route 53 must perform.
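A rough way to reason about the delay is to add the time needed to detect a failure to the time cached DNS answers remain valid. The sketch below is a simplified model, not a figure published by AWS: it assumes a 30-second interval between health check requests and a threshold of 3 consecutive failures (both assumptions; check your own health check settings), and it ignores any other processing Route 53 performs.

```python
def estimate_failover_seconds(request_interval=30, failure_threshold=3, ttl=60):
    """Rough estimate: failure detection time plus the DNS record TTL.

    All defaults are assumptions for illustration, not measured values.
    """
    detection = request_interval * failure_threshold
    return detection + ttl


# With the TTL of 60 seconds used in this guide.
print(estimate_failover_seconds())          # 150

# With a TTL of 300 seconds, clients may keep stale answers much longer.
print(estimate_failover_seconds(ttl=300))   # 390
```

Under these assumptions the estimate for a 60-second TTL is 150 seconds, which is consistent with the "up to three minutes" stated above.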

We create health checks both for each NGINX Plus load balancer individually and for the load balancers in each region as a pair. Then we update the record sets created in the previous section to refer to the health checks.

- Configuring Route 53 Health Checks for Individual Load Balancers

- Configuring Route 53 Health Checks for the Paired Load Balancers in Each Region

- Modifying Record Sets to Associate Them with the Newly Defined Health Checks

Configuring Route 53 Health Checks for Individual Load Balancers

1. Navigate to the Health checks tab on the Route 53 dashboard.

2. Click the Create health check button. In the Configure health check form that opens, specify the following values, then click the Next button.

   - Name – Identifier for an NGINX Plus load balancer instance, for example US West LB 1.

   - What to monitor – Endpoint.

   - Specify endpoint by – IP address.

   - IP address – The elastic IP address of the NGINX Plus load balancer.

   - Port – The port advertised to clients for your domain or web service (the default is 80).

3. On the Get notified when health check fails screen that opens, set the Create alarm radio button to Yes or No as appropriate, then click the Create health check button.

4. Repeat Steps 2 and 3 for your other NGINX Plus load balancers (in this guide, US West LB 2, US East LB 1, and US East LB 2).

5. Proceed to the next section to configure health checks for the load balancer pairs.
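The four endpoint health checks defined above can be summarized as data. The sketch below builds one configuration per load balancer, using field names from the Route 53 `HealthCheckConfig` structure as we understand it (verify against the API reference), and mirrors the pass/fail rule stated in this guide. The helper names and the IP addresses are hypothetical placeholders.

```python
import json


def endpoint_health_check(ip_address, port=80, path="/"):
    """Configuration for an HTTP health check of one endpoint (hypothetical helper)."""
    return {
        "IPAddress": ip_address,
        "Port": port,
        "Type": "HTTP",
        "ResourcePath": path,
    }


def probe_is_healthy(status_code, timed_out=False):
    """Rule as described in this guide: only a timely 200 OK counts as healthy."""
    return (not timed_out) and status_code == 200


# Placeholder addresses: substitute the elastic IP address of each load balancer.
load_balancers = {
    "US West LB 1": "192.0.2.10",
    "US West LB 2": "192.0.2.11",
    "US East LB 1": "198.51.100.10",
    "US East LB 2": "198.51.100.11",
}

health_checks = {name: endpoint_health_check(ip) for name, ip in load_balancers.items()}
print(json.dumps(health_checks, indent=2))

print(probe_is_healthy(200))                  # True
print(probe_is_healthy(503))                  # False
print(probe_is_healthy(200, timed_out=True))  # False
```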

Configuring Route 53 Health Checks for the Paired Load Balancers in Each Region

-

Click the Create health check button.

-

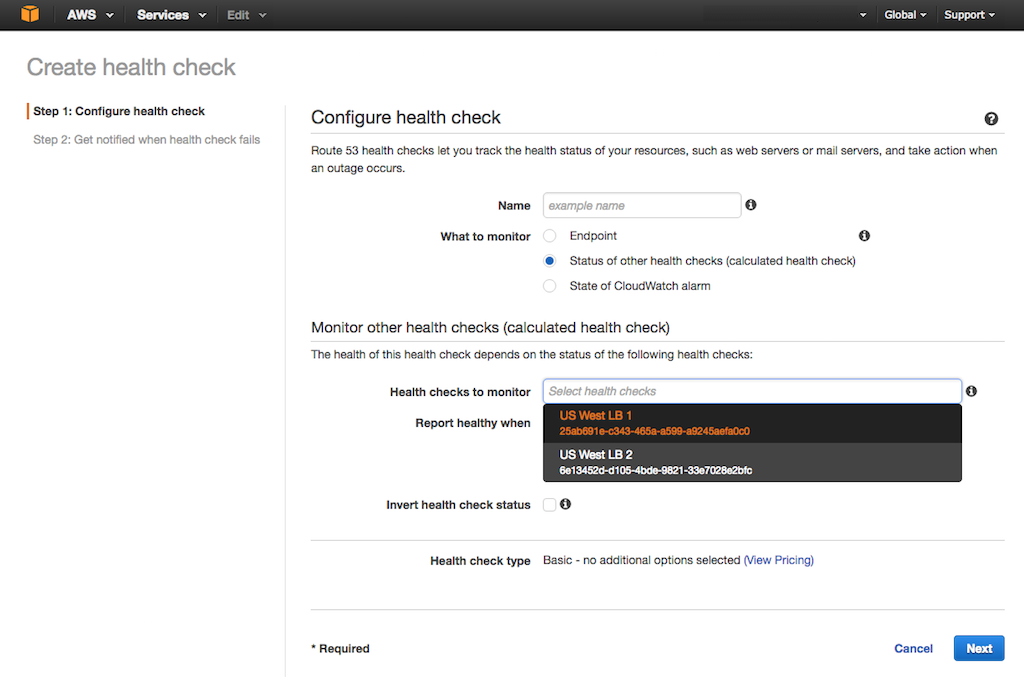

In the Configure health check form that opens, specify the following values, then click the Next button.

- Name – Identifier for the pair of NGINX Plus load balancers in the first region, for example US West LBs.

- What to monitor – Status of other health checks .

- Health checks to monitor – The health checks of the two US West load balancers (add them one after the other by clicking in the box and choosing them from the drop‑down menu as shown).

- Report healthy when – at least 1 of 2 selected health checks are healthy (the choices in this field are obscured in the screenshot by the drop‑down menu).

-

On the Get notified when health check fails screen that opens, set the Create alarm radio button as appropriate (see Step 5 in the previous section), then click the Create health check button.

-

Repeat Steps 1 through 3 for the paired load balancers in the other region [in this guide, US East (N. Virginia)].

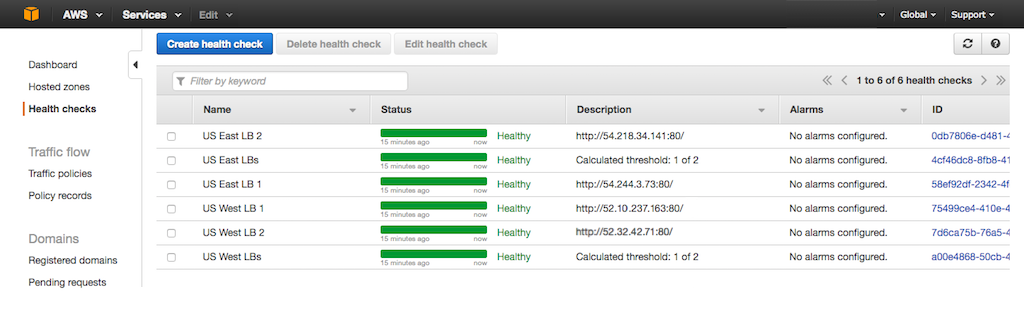

When you have finished configuring all six health checks, the Health checks tab looks like this:
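The "at least 1 of 2" rule for a paired health check is a simple threshold over the child checks. The sketch below shows that rule, plus a configuration in the shape of a Route 53 calculated health check as we understand it (verify the field names against the API reference). The function names and the child health check IDs are hypothetical placeholders.

```python
def calculated_healthy(child_states, threshold=1):
    """Healthy when at least `threshold` child health checks are healthy."""
    return sum(1 for state in child_states if state) >= threshold


def calculated_health_check(child_ids, threshold=1):
    """Configuration for a health check that monitors other health checks."""
    return {
        "Type": "CALCULATED",
        "ChildHealthChecks": list(child_ids),
        "HealthThreshold": threshold,
    }


# One load balancer down: the pair is still healthy, so no regional failover.
print(calculated_healthy([True, False]))    # True

# Both load balancers down: the pair is unhealthy, which triggers failover.
print(calculated_healthy([False, False]))   # False

# Placeholder IDs: substitute the IDs of the US West LB 1 and LB 2 health checks.
print(calculated_health_check(["<ID of US West LB 1 check>", "<ID of US West LB 2 check>"]))
```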

Modifying Record Sets to Associate Them with the Newly Defined Health Checks

1. Navigate to the Hosted Zones tab.

2. Click on the domain name in the Domain Name row for your hosted zone.

   The tab changes to display the record sets for the domain.

3. In the list of record sets that opens, click the row for the record set belonging to your first region [in this guide, US West (Oregon)]. The Edit Record Set column opens on the right side of the tab.

4. Change the Associate with Health Check radio button to Yes.

5. In the Health Check to Associate field, select the paired health check for your first region (in this guide, US West LBs).

6. Click the Save Record Set button.

7. Repeat Steps 3 through 6 for the record set belonging to the other region [in this guide, US East (N. Virginia)], selecting that region's paired health check.
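Expressed as data, associating a health check amounts to updating the existing record set with a health check ID. The sketch below assumes the Route 53 change format (`UPSERT` action, `HealthCheckId` field) as we understand it; the helper name, the IP addresses, and the ID value are hypothetical placeholders.

```python
import copy
import json


def associate_health_check(record_set, health_check_id):
    """Return an UPSERT change that adds a health check to a record set.

    The input record set is copied, not modified.
    """
    updated = copy.deepcopy(record_set)
    updated["HealthCheckId"] = health_check_id
    return {"Action": "UPSERT", "ResourceRecordSet": updated}


# Record set for the first region, with placeholder IP addresses.
us_west = {
    "Name": "www.nginxroute53.com",
    "Type": "A",
    "SetIdentifier": "US West LBs",
    "Region": "us-west-2",
    "TTL": 60,
    "ResourceRecords": [{"Value": "192.0.2.10"}, {"Value": "192.0.2.11"}],
}

change = associate_health_check(us_west, "<ID of the US West LBs health check>")
print(json.dumps(change, indent=2))
```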

Configuring NGINX Plus Application Health Checks

When you are using the NGINX Plus load balancer, we recommend that you configure application health checks of your backend servers. You can configure NGINX Plus to check more than simply whether a server is responding or returning 5xx, for example whether the content returned by the server is correct. When a server fails a health check, NGINX Plus removes it from the load‑balancing rotation until it passes a configured number of consecutive health checks. If all backend servers are down, NGINX Plus sends a 5xx error to Route 53, which triggers a failover to another region.

These instructions assume that you have configured NGINX Plus on two EC2 instances in each region, as instructed in Configuring NGINX Plus on the Load Balancers.

Note: Some commands require root privilege. If appropriate for your environment, prefix commands with the sudo command.

1. Connect to the US West LB 1 instance. For instructions, see Connecting to an EC2 Instance.

2. Change directory to /etc/nginx/conf.d.

       cd /etc/nginx/conf.d

3. Edit the west-lb1.conf file and add the @healthcheck location to set up health checks.

       upstream backend-servers {
           server <public DNS name of Backend 1>; # Backend 1
           server <public DNS name of Backend 2>; # Backend 2
           zone backend-servers 64k;              # shared memory zone, required for health checks
       }

       server {
           location / {
               proxy_pass http://backend-servers;
           }

           location @healthcheck {
               proxy_pass http://backend-servers;
               proxy_connect_timeout 1s;
               proxy_read_timeout 1s;
               health_check uri=/ interval=1s;    # request / from each backend every second
           }
       }

   Directive documentation: location, proxy_connect_timeout, proxy_pass, proxy_read_timeout, server virtual, server upstream, upstream, zone

4. Verify the validity of the NGINX configuration and load it.

       nginx -t
       nginx -s reload

5. Repeat Steps 1 through 4 for the other three load balancers (US West LB 2, US East LB 1, and US East LB 2).

   In Step 3, change the filename as appropriate (west-lb2.conf, east-lb1.conf, and east-lb2.conf). In the east-lb1.conf and east-lb2.conf files, the server directives specify the public DNS names of Backend 3 and Backend 4.
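The way a backend server leaves and rejoins the load‑balancing rotation can be illustrated with a small simulation. This is a sketch of the behavior described in this section, not NGINX Plus code. The `fails` and `passes` names follow the parameters of the `health_check` directive; the class, the `lb_status` function, and the choice of 502 as the example 5xx code are assumptions for illustration.

```python
class PeerHealth:
    """Tracks one backend: it is marked unhealthy after `fails` consecutive
    failed checks and healthy again after `passes` consecutive passed checks."""

    def __init__(self, fails=1, passes=1):
        self.fails = fails
        self.passes = passes
        self.healthy = True
        self._streak = 0  # consecutive results that contradict the current state

    def record(self, check_passed):
        if check_passed == self.healthy:
            self._streak = 0
        else:
            self._streak += 1
            needed = self.fails if self.healthy else self.passes
            if self._streak >= needed:
                self.healthy = check_passed
                self._streak = 0
        return self.healthy


def lb_status(peers):
    """Status the load balancer returns: 200 if any backend is in rotation,
    otherwise a 5xx error (502 used here as an example)."""
    return 200 if any(p.healthy for p in peers) else 502


backend1 = PeerHealth(fails=1, passes=2)
backend2 = PeerHealth(fails=1, passes=2)

# Backend 1 fails one check, then needs two consecutive passes to rejoin.
print([backend1.record(result) for result in (True, False, True, True)])
# [True, False, False, True]

# If both backends fail, the load balancer answers Route 53 with a 5xx error.
backend1.record(False)
backend2.record(False)
print(lb_status([backend1, backend2]))   # 502
```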

Appendix

The instructions in this Appendix explain how to create EC2 instances with the names used in this guide, and then install and configure NGINX Open Source and NGINX Plus on them:

- Creating EC2 Instances and Installing the NGINX Software

- Configuring Elastic IP Addresses

- Configuring NGINX Open Source on the Backend Servers

- Configuring NGINX Plus on the Load Balancers

Creating EC2 Instances and Installing the NGINX Software

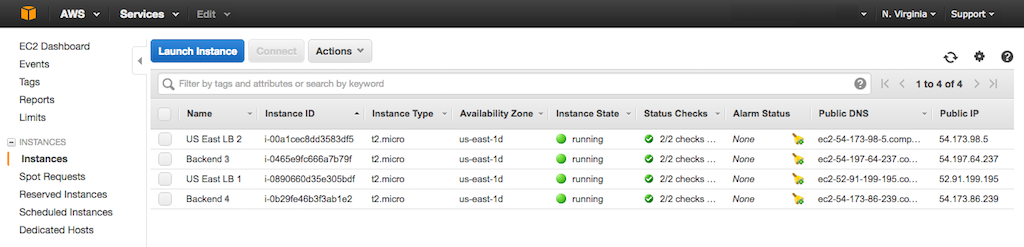

The deployment in this guide uses eight EC2 instances, four in each of two AWS regions. In each region, two instances run NGINX Plus to load balance traffic to the backend (NGINX Open Source) web servers running on the other two instances.

Step‑by‑step instructions for creating EC2 instances and installing NGINX software are provided in our deployment guide, Creating Amazon EC2 Instances for NGINX Open Source and NGINX Plus.

Note: When installing NGINX Open Source or NGINX Plus, you connect to each instance over SSH. To save time, leave the SSH connection to each instance open after installing the software, for reuse when you configure it with the instructions in the sections below.

Assign the following names to the instances, and then install the indicated NGINX software.

- In the first region, which is US West (Oregon) in this guide:

  - Two load balancer instances running NGINX Plus:

    - US West LB 1

    - US West LB 2

  - Two backend instances running NGINX Open Source:

    - Backend 1

    - Backend 2

- In the second region, which is US East (N. Virginia) in this guide:

  - Two load balancer instances running NGINX Plus:

    - US East LB 1

    - US East LB 2

  - Two backend instances running NGINX Open Source:

    - Backend 3

    - Backend 4

Here’s the Instances tab after we create the four instances in the N. Virginia region.

Configuring Elastic IP Addresses

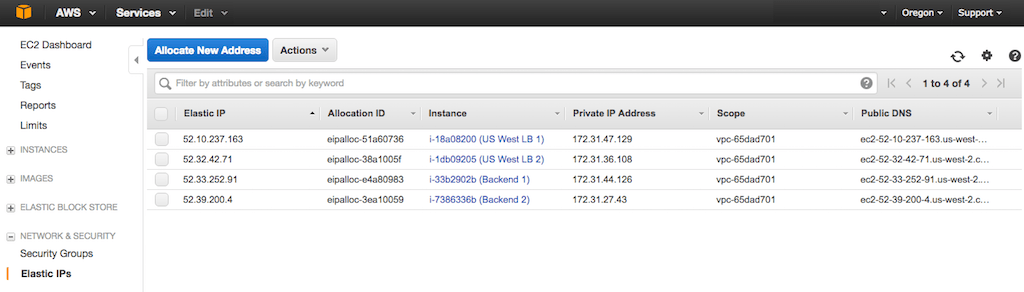

For some EC2 instance types (for example, on‑demand instances), AWS by default assigns a different IP address to an instance each time you shut it down and spin it back up. A load balancer must know the IP addresses of the servers to which it is forwarding traffic, so the default AWS behavior requires you either to set up a service‑discovery mechanism or to modify the NGINX Plus configuration every time you shut down or restart a backend instance. (A similar requirement applies to Route 53 when we shut down or restart an NGINX Plus instance.) To get around this inconvenience, assign an elastic IP address to each of the eight instances.

Note: AWS does not charge for elastic IP addresses as long as the associated instance is running. But when you shut down an instance, AWS charges a small amount to maintain the association to an elastic IP address. For details, see the Amazon EC2 Pricing page for your pricing model (for example, the Elastic IP Addresses section of the On‑Demand Pricing page).

Perform these steps on all eight instances.

1. Navigate to the Elastic IPs tab on the EC2 Dashboard.

2. Click the Allocate New Address button. In the window that pops up, click the Yes, Allocate button and then the Close button.

3. Associate the elastic IP address with an EC2 instance:

   - Right‑click in the IP address’ row and select Associate Address from the drop‑down menu that appears.

   - In the window that pops up, click in the Instance field and select an instance from the drop‑down menu.

   Confirm your selection by clicking the Associate button.

After you complete the instructions on all instances, the list for a region (here, Oregon) looks like this:

Configuring NGINX Open Source on the Backend Servers

Perform these steps on all four backend servers: Backend 1, Backend 2, Backend 3, and Backend 4. In Step 3, substitute the appropriate name for Backend X in the index.html file.

Note: Some commands require root privilege. If appropriate for your environment, prefix commands with the sudo command.

1. Connect over SSH to the instance (or return to the terminal you left open after installing NGINX Open Source) and change directory to your home directory. For the instance in this guide, it is /home/ubuntu.

       cd /home/ubuntu

2. Create a directory called public_html and change directory to it.

       mkdir public_html
       cd public_html

3. Using your preferred text editor, create a new file called index.html and add this text to it:

       This is Backend X

4. Change directory to /etc/nginx/conf.d.

       cd /etc/nginx/conf.d

5. Rename default.conf to default.conf.bak so that NGINX does not load it.

       mv default.conf default.conf.bak

6. Create a new file called backend.conf and add this text, which defines the docroot for this web server:

       server {
           root /home/ubuntu/public_html;
       }

7. Verify the validity of the NGINX configuration and load it.

       nginx -t
       nginx -s reload

Configuring NGINX Plus on the Load Balancers

Perform these steps on all four load balancers: US West LB 1, US West LB 2, US East LB 1, and US East LB 2.

Note: Some commands require root privilege. If appropriate for your environment, prefix commands with the sudo command.

1. Connect over SSH to the instance (or return to the terminal you left open after installing NGINX Plus) and change directory to /etc/nginx/conf.d.

       cd /etc/nginx/conf.d

2. Rename default.conf to default.conf.bak so that NGINX Plus does not load it.

       mv default.conf default.conf.bak

3. Create a new file containing the following text, which configures load balancing of the two backend servers in the relevant region. The filename on each instance is:

   - For US West LB 1 – west-lb1.conf

   - For US West LB 2 – west-lb2.conf

   - For US East LB 1 – east-lb1.conf

   - For US East LB 2 – east-lb2.conf

   In the server directives in the upstream block, substitute the public DNS names of the backend instances in the region; to learn them, see the Instances tab in the EC2 Dashboard.

       upstream backend-servers {
           server <public DNS name of Backend 1>; # Backend 1
           server <public DNS name of Backend 2>; # Backend 2
       }

       server {
           location / {
               proxy_pass http://backend-servers;
           }
       }

   Directive documentation: location, proxy_pass, server virtual, server upstream, upstream

4. Verify the validity of the NGINX configuration and load it.

       nginx -t
       nginx -s reload

5. To test that the configuration is working correctly, for each load balancer enter its public DNS name in the address field of your web browser. As you access the load balancer repeatedly, the content of the page alternates between “This is Backend 1” and “This is Backend 2” in your first region, and “This is Backend 3” and “This is Backend 4” in the second region.
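If you prefer to script the check instead of reloading a browser, a sketch like the following counts how often each backend page is returned. The function names are hypothetical, and the demonstration uses a stand-in fetcher that alternates responses so that it runs without network access.

```python
from collections import Counter
from itertools import cycle
from urllib.request import urlopen


def fetch_body(url, timeout=5):
    """Fetch a URL and return the response body as stripped text."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace").strip()


def tally_backends(url, requests=10, fetch=fetch_body):
    """Request `url` repeatedly and count the distinct page bodies returned."""
    return Counter(fetch(url) for _ in range(requests))


# Offline demonstration: a fake fetcher that alternates between two pages,
# standing in for a load balancer that distributes requests evenly.
fake_pages = cycle(["This is Backend 1", "This is Backend 2"])
counts = tally_backends("http://lb.example/", requests=6,
                        fetch=lambda url: next(fake_pages))
print(counts)
```

Against a real deployment, call `tally_backends` with only the URL of a load balancer (its public DNS name) so that the default fetcher is used; roughly equal counts for the two backends in the region indicate that load balancing is working.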

Now that all eight EC2 instances are configured and local load balancing is working correctly, we can set up global server load balancing with Route 53 to route traffic based on the IP address of the requesting client.

Return to main instructions, Configuring Global Server Load Balancing

Revision History

- Version 3 (April 2018) – Reorganization of Appendix

- Version 2 (January 2017) – Clarified information about root permissions; miscellaneous fixes (NGINX Plus Release 11)

- Version 1 (October 2016) – Initial version (NGINX Plus Release 10)